AI Recruitment: How ClurQ Improves Talent Quality

Across technology hiring ecosystems, conversations about artificial intelligence often centre on speed: faster sourcing, faster screening, faster closure. Yet top-performing organisations increasingly recognise that speed without quality compounds risk rather than value. Talent quality now drives competitive differentiation, delivery stability, and long-term workforce resilience. Effective AI recruitment therefore shifts focus away from automation volume and toward decision accuracy.

Talent quality extends beyond credential strength. Real quality reflects problem-solving depth, execution reliability, learning velocity, and intent alignment. Traditional hiring frameworks struggle to capture these dimensions consistently. Resume-led screening rewards keyword matches; interview-heavy evaluation favours confident articulation. Both mechanisms correlate weakly with real-world performance.

AI recruitment platforms succeed only when they are designed to amplify quality rather than simply accelerate process. High-impact systems surface signals earlier, reduce noise, and enforce structured evaluation discipline across stakeholders. In such systems, artificial intelligence serves as decision support, not a replacement for decisions.

ClurQ is built on this philosophy. Its approach to AI recruitment emphasises structured hiring intelligence, capability validation, and clear governance across workflows. The underlying principle is that AI recruitment succeeds or fails on system design choices. The sections that follow examine how ClurQ applies artificial intelligence across requirement framing, screening logic, and evaluation discipline.

Translating Role Ambiguity Into Structured Hiring Intelligence

Building on these quality-first principles, ClurQ addresses the earliest failure point in hiring systems: role ambiguity. Much of the erosion in hiring quality originates before sourcing begins, when expectations remain implicit, conflicting, or poorly articulated. Artificial intelligence cannot correct unclear intent; it can only amplify the clarity already present. ClurQ therefore applies AI at the requirement-intelligence layer, not only at the post-facto screening stage.

ClurQ transforms role understanding through structured problem framing. Hiring teams define outcome expectations, execution context, stakeholder exposure, and capability priorities using guided inputs rather than free-text descriptions. This creates a consistent intelligence baseline on which AI models operate. Screening logic, evaluation weighting, and candidate ranking then align with actual delivery needs rather than generic job labels.
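
To make the idea of guided inputs concrete, here is a minimal Python sketch of a structured role profile. It assumes a three-level priority scale and field names chosen purely for illustration (`RoleProfile`, `PRIORITY_POINTS`, `capability_weights`); none of these are ClurQ's actual schema or API. The point it shows is that validated, structured fields can be converted directly into weights for later screening and scoring, which free-text descriptions cannot.

```python
from dataclasses import dataclass, field

# Hypothetical illustration of a structured role profile; not ClurQ's schema.
# Guided inputs offer a fixed priority scale instead of free text.
PRIORITY_POINTS = {"critical": 3, "important": 2, "useful": 1}


@dataclass
class RoleProfile:
    title: str
    outcome_expectations: list[str]
    execution_context: str      # e.g. "legacy migration", "greenfield build"
    stakeholder_exposure: str   # e.g. "internal team", "client-facing"
    capability_priorities: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject anything outside the guided options so profiles stay comparable.
        if not self.outcome_expectations:
            raise ValueError("at least one outcome expectation is required")
        for capability, level in self.capability_priorities.items():
            if level not in PRIORITY_POINTS:
                raise ValueError(f"unknown priority {level!r} for {capability!r}")

    def capability_weights(self) -> dict[str, float]:
        """Turn priority labels into weights that sum to 1.0 for screening and scoring."""
        points = {c: PRIORITY_POINTS[lvl] for c, lvl in self.capability_priorities.items()}
        total = sum(points.values())
        return {c: p / total for c, p in points.items()} if total else {}


role = RoleProfile(
    title="Backend Engineer",
    outcome_expectations=["Migrate the billing service without downtime"],
    execution_context="legacy migration",
    stakeholder_exposure="cross-functional",
    capability_priorities={
        "system_design": "critical",
        "debugging": "important",
        "mentoring": "useful",
    },
)
print(role.capability_weights())
```

Because every priority must come from the fixed scale, two hiring teams describing similar roles produce directly comparable weightings.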

Structured intelligence significantly reduces downstream noise. Candidate pools shrink deliberately while relevance improves. Recruiters spend less time filtering out mismatched profiles, and hiring managers engage with candidates who reflect the true intent of the role. Quality improves before evaluation even begins.

Technology that supports requirement intelligence makes this shift possible. Once role clarity is converted into structured intelligence, AI screening gains precision. The next section looks at how ClurQ uses artificial intelligence to amplify signal during candidate evaluation.

Elevating Screening Accuracy Through Signal-Driven AI Models

With role intelligence structured upstream, ClurQ applies artificial intelligence to screening precision rather than resume elimination. Conventional AI screening relies heavily on keyword density and historical hiring patterns, which can replicate past bias and reward familiarity over capability. ClurQ's approach instead prioritises extracting signals aligned with role-specific success criteria.

Screening models evaluate candidates against capability indicators mapped during requirement framing. Project exposure, decision complexity, execution context, and learning velocity receive higher relevance weighting than pedigree signals. AI operates as a relevance amplifier, surfacing candidates whose experience patterns align with real delivery expectations rather than surface-level similarity.

This approach materially improves shortlist quality. Shortlists become smaller yet stronger. Recruiters regain confidence in AI outputs because surfaced profiles demonstrate contextual fit rather than mere statistical proximity. Hiring managers spend their time evaluating substance rather than filtering noise, which makes downstream decisions more efficient.

Crucially, ClurQ avoids black-box screening outcomes. Signal logic remains explainable, enabling stakeholders to understand why each candidate surfaces. This transparency strengthens trust across AI-assisted hiring journeys and reduces resistance to algorithm-supported decisions.
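
One way to keep screening explainable is to use a model whose score decomposes into per-signal contributions. The sketch below assumes a simple weighted linear score over signals that have already been extracted and scaled to [0, 1]; the function, class, and signal names are hypothetical, and ClurQ's actual models are not described here. Signals the role does not weight, such as a pedigree marker, simply contribute nothing.

```python
from dataclasses import dataclass

# Hypothetical explainable screening sketch; not ClurQ's model.


@dataclass
class ScreeningResult:
    candidate_id: str
    score: float
    contributions: dict[str, float]  # weight * signal value, per weighted signal

    def explain(self, top_n: int = 3) -> list[str]:
        """Reasons a candidate surfaced, largest contribution first."""
        ranked = sorted(self.contributions.items(), key=lambda kv: kv[1], reverse=True)
        return [f"{signal}: +{value:.2f}" for signal, value in ranked[:top_n]]


def screen(candidate_id: str, signals: dict[str, float],
           weights: dict[str, float]) -> ScreeningResult:
    """Weighted linear score over role-specific signals scaled to [0, 1].

    Only signals named in `weights` count; anything else (e.g. a pedigree
    marker) is ignored. Missing signals count as 0.
    """
    contributions = {}
    for signal, weight in weights.items():
        value = min(max(signals.get(signal, 0.0), 0.0), 1.0)  # clamp to [0, 1]
        contributions[signal] = weight * value
    return ScreeningResult(candidate_id, sum(contributions.values()), contributions)


weights = {"project_exposure": 0.4, "decision_complexity": 0.3, "learning_velocity": 0.3}
result = screen(
    "cand-17",
    {"project_exposure": 0.9, "decision_complexity": 0.5,
     "learning_velocity": 0.8, "top_tier_university": 1.0},
    weights,
)
print(round(result.score, 2), result.explain())
```

Because every point of the score traces back to a named signal, `explain()` can show a recruiter exactly why a candidate surfaced. More complex models would need separate attribution techniques to offer the same view.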

Infrastructure that enables explainable screening plays a central role here. As screening accuracy improves, evaluation depth becomes the next differentiator. The next section looks at how ClurQ integrates artificial intelligence into assessment and scoring frameworks.

Strengthening Evaluation Quality Through AI-Assisted Evidence Scoring

With screening accuracy improved, ClurQ extends artificial intelligence into evaluation quality rather than simply automating interviews. Traditional interviews produce inconsistent evidence, shaped by interviewer bias, differences in candidate preparation, and whoever dominates the conversation. ClurQ introduces AI as an evidence-standardisation layer, improving decision reliability without replacing human judgement.

Evaluation frameworks within ClurQ are anchored in task-based and scenario-led assessments that mirror real role execution. AI assists by analysing output quality, reasoning depth, and structural clarity across submissions. Scoring remains role-specific, weighted according to the capability priorities defined during requirement framing. This creates comparability across candidates while preserving contextual nuance.

AI-assisted scoring also makes interviewers more effective. Feedback templates, signal prompts, and evidence flags guide interviewers toward a consistent evaluation focus. Subjective impressions carry less weight, while observable indicators gain prominence. Decision discussions become evidence-led rather than opinion-driven.

Importantly, ClurQ maintains explainability wherever AI is involved. Stakeholders review scoring rationale, evidence highlights, and confidence indicators before making final decisions. This transparency strengthens adoption while protecting accountability.
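
Evidence scoring with a confidence indicator can be sketched in a few lines. This hypothetical example assumes a 1 to 5 rubric, several evaluators rating each capability, and role weights from requirement framing. It reports a normalised score together with a confidence value derived from how closely evaluators agree. Using rater agreement as the confidence measure is an assumption made for illustration, not a documented ClurQ method.

```python
from statistics import mean, pstdev

# Hypothetical evidence-scoring sketch; the rubric and confidence measure are assumptions.
RUBRIC_MIN, RUBRIC_MAX = 1, 5


def score_evidence(ratings: dict[str, list[int]], weights: dict[str, float]) -> dict:
    """Combine several evaluators' rubric ratings into one weighted, explainable result.

    ratings: capability -> ratings from each evaluator on the 1-5 rubric.
    weights: capability -> weight from requirement framing (must cover every rated capability).
    """
    by_capability, spreads = {}, []
    for capability, values in ratings.items():
        if not values:
            raise ValueError(f"no ratings recorded for {capability!r}")
        by_capability[capability] = mean(values)
        spreads.append(pstdev(values))  # 0 when evaluators agree exactly

    total_weight = sum(weights[c] for c in by_capability)
    weighted_mean = sum(weights[c] * m for c, m in by_capability.items()) / total_weight
    # Map the weighted rubric mean onto [0, 1].
    score = (weighted_mean - RUBRIC_MIN) / (RUBRIC_MAX - RUBRIC_MIN)
    # The largest possible population std dev on the rubric is half its range.
    confidence = 1 - mean(spreads) / ((RUBRIC_MAX - RUBRIC_MIN) / 2)
    return {"score": score, "by_capability": by_capability, "confidence": confidence}


evidence = score_evidence(
    {"reasoning_depth": [4, 4, 5], "structural_clarity": [3, 5, 4]},
    {"reasoning_depth": 0.6, "structural_clarity": 0.4},
)
print(round(evidence["score"], 2), round(evidence["confidence"], 2))
```

A low confidence value does not change the score; it tells the panel that evaluators saw the evidence differently and that the scoring rationale deserves discussion before a decision.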

Platforms that support structured evaluation enable this model. Once evaluation discipline is strengthened, governance alignment becomes essential. The next section covers how ClurQ keeps AI-supported decisions accountable and auditable.

Governing AI Decisions Through Accountability And Human Oversight

As AI-assisted evaluation strengthens hiring signal quality, governance discipline becomes critical. Without clear accountability structures, artificial intelligence risks becoming a proxy for authority rather than a decision aid. ClurQ addresses this challenge through deliberate design choices that keep every hiring outcome owned, reviewable, and defensible.

ClurQ embeds AI within governed decision loops rather than autonomous flows. Recruiters, hiring managers, and business leaders retain explicit responsibility at each approval stage. AI outputs surface insights, confidence markers, and risk flags, yet final judgement always rests with accountable stakeholders. This structure guards against blind reliance on AI while preserving efficiency gains.

Governance visibility further strengthens trust. Every recommendation carries traceable rationale, linked evidence, and scoring context. Decision discussions reference shared data rather than fragmented opinions. Over time, teams develop consistent decision language, reducing friction across cross-functional hiring panels.

This governance-first approach also supports compliance and audit readiness. Organisations keep a clear record of why specific candidates progressed, declined, or received offers, backed by documented evidence rather than retrospective justification.
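
At their core, governed decision loops are a record-keeping discipline. The following sketch uses hypothetical class and field names. It stores the AI recommendation as advisory context next to the human decision, and it refuses any entry that lacks an accountable owner, a written rationale, or linked evidence. It illustrates the principle and is not ClurQ's audit model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical governed-decision log; names and fields are illustrative, not ClurQ's API.


@dataclass(frozen=True)
class AuditEntry:
    candidate_id: str
    stage: str
    ai_recommendation: str    # advisory only, e.g. "advance" or "hold"
    ai_confidence: float
    decision: str             # what the accountable human actually decided
    decided_by: str
    rationale: str
    evidence_refs: tuple[str, ...]
    recorded_at: str          # UTC timestamp, ISO 8601


@dataclass
class DecisionLog:
    entries: list[AuditEntry] = field(default_factory=list)

    def record(self, candidate_id: str, stage: str, ai_recommendation: str,
               ai_confidence: float, decision: str, decided_by: str,
               rationale: str, evidence_refs: list[str]) -> AuditEntry:
        """Store a human decision next to the AI advice; ownership and evidence are mandatory."""
        if not decided_by.strip():
            raise ValueError("every decision needs an accountable owner")
        if not rationale.strip():
            raise ValueError("a written rationale is required")
        if not evidence_refs:
            raise ValueError("link at least one piece of evidence")
        entry = AuditEntry(candidate_id, stage, ai_recommendation, ai_confidence,
                           decision, decided_by, rationale, tuple(evidence_refs),
                           datetime.now(timezone.utc).isoformat())
        self.entries.append(entry)
        return entry

    def history(self, candidate_id: str) -> list[AuditEntry]:
        """Full decision trail for one candidate, in recording order."""
        return [e for e in self.entries if e.candidate_id == candidate_id]

    def overrides(self) -> list[AuditEntry]:
        """Decisions where the human departed from the AI recommendation."""
        return [e for e in self.entries if e.decision != e.ai_recommendation]


log = DecisionLog()
log.record("cand-17", "shortlist", "advance", 0.82, "advance", "hiring-manager-1",
           "Strong system design evidence in task submission", ["assessment-0042"])
log.record("cand-17", "offer", "advance", 0.64, "hold", "hiring-manager-1",
           "Reference check still pending", ["reference-notes-0007"])
print(len(log.history("cand-17")), [e.stage for e in log.overrides()])
```

Listing `overrides()` offers a simple view of where panels departed from AI recommendations. This is useful for audits, and it also shows whether the model is being accepted without scrutiny.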

Infrastructure for accountable oversight underpins this model. With governance confidence established, organisations can scale without hesitation. The next section explores how ClurQ supports AI-powered hiring expansion while keeping quality consistent.

Scaling High-Quality Hiring Without Signal Degradation

With governance controls firmly established, ClurQ enables organisations to scale AI recruitment without eroding talent quality. Many hiring systems break down during expansion, when a growing requisition load overwhelms evaluation discipline. ClurQ addresses this challenge with repeatable intelligence layers rather than dependence on manual effort.

AI models within ClurQ continuously learn from validated hiring outcomes. Signal weightings are refined based on post-joining performance indicators, ramp-up stability, and early delivery success. This feedback loop strengthens relevance across future screening and evaluation cycles. Quality therefore compounds rather than dilutes as hiring scales.

Operational consistency also improves through configurable hiring architectures. Core evaluation stages remain fixed, while evaluation depth adapts to role complexity and risk exposure. High-impact roles receive deeper validation, while lower-risk positions move efficiently without compromising baseline standards. Recruiters can manage volume without sacrificing judgement quality.
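
A configurable hiring architecture can be expressed as fixed core stages plus tier-dependent depth. In this hypothetical sketch, complexity and risk exposure are each rated from 1 to 5, and the stricter of the two ratings sets the tier. Extra validation stages are then inserted before the final panel. Stage names, tiers, and thresholds are all illustrative assumptions.

```python
# Hypothetical tiered pipeline; stage names, tiers and thresholds are assumptions.
CORE_STAGES = ("structured_screen", "task_assessment", "panel_review")

# Extra validation added on top of the fixed core, by risk tier.
DEPTH_BY_TIER = {
    "low": (),
    "medium": ("scenario_deep_dive",),
    "high": ("scenario_deep_dive", "leadership_review", "reference_validation"),
}


def risk_tier(complexity: int, risk_exposure: int) -> str:
    """Map 1-5 ratings to a tier; the stricter of the two ratings decides."""
    if not (1 <= complexity <= 5 and 1 <= risk_exposure <= 5):
        raise ValueError("ratings must be between 1 and 5")
    level = max(complexity, risk_exposure)
    if level >= 4:
        return "high"
    if level == 3:
        return "medium"
    return "low"


def build_pipeline(complexity: int, risk_exposure: int) -> list[str]:
    """Core stages always run; tier-specific stages slot in before the panel review."""
    extra = DEPTH_BY_TIER[risk_tier(complexity, risk_exposure)]
    *before_panel, panel = CORE_STAGES
    return [*before_panel, *extra, panel]


print(build_pipeline(2, 1))  # low-risk role: core stages only
print(build_pipeline(3, 5))  # high exposure: deeper validation
```

Keeping the core tuple untouched is what preserves the baseline standard: tiers can only add validation, never remove a core stage.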

Equally important, hiring velocity improves organically. Reduced rework, lower dropout rates, and stronger shortlist confidence accelerate closure without artificial pressure. AI acts as stabilising infrastructure, absorbing scale complexity while preserving decision clarity.

Platforms that support scalable intelligence orchestration underpin this capability. Once hiring is stable at scale, attention shifts to candidate experience in AI-driven hiring. The next section describes how ClurQ preserves fairness, clarity, and trust across automated touchpoints.

Preserving Candidate Trust Within AI-Driven Hiring Journeys

As AI recruitment scales, candidate trust becomes increasingly fragile. Automation without transparency can make the process feel opaque to candidates, prompting disengagement and concerns about fairness. ClurQ recognises that improving talent quality depends not only on evaluation accuracy but also on candidate confidence throughout the hiring journey.

ClurQ designs AI-assisted interactions around clarity rather than mystery. Candidates receive upfront visibility into evaluation structure, assessment purpose, and decision timelines. Automated communication stays contextual, reflecting each candidate's current stage and expectations rather than generic system messaging. This reduces uncertainty and deepens engagement.

Fairness remains a central design principle. AI models operate on structured role intelligence and evidence-based scoring, which reduces arbitrary elimination. Explainable outcomes help candidates understand why they did or did not progress, even when they are unsuccessful. This transparency preserves employer credibility and long-term brand trust.

Recruiters retain ownership of candidate communication. AI assists with timing, reminders, and consistent information, yet a human presence remains visible at key moments. This balance reinforces authenticity within technology-enabled hiring systems.

Platforms that support candidate transparency enable this experience. With candidate confidence preserved, AI recruitment can move beyond operational support. Its strategic value emerges through insight generation, the focus of the next section.

Converting Hiring Data Into Strategic Talent Intelligence

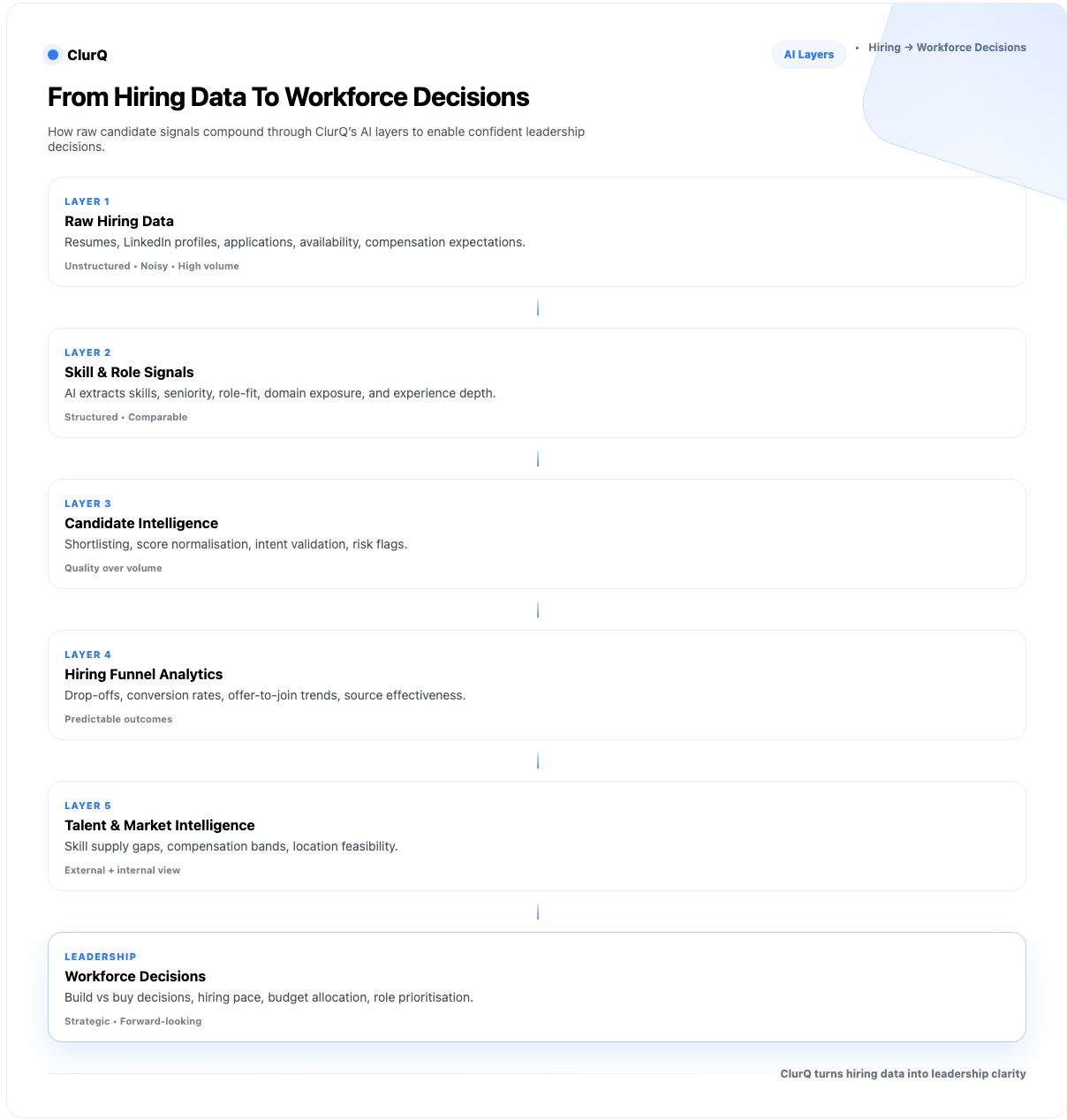

With candidate trust sustained across AI-enabled workflows, ClurQ extends its value through insight generation, not process support alone. Structured correctly, hiring data becomes strategic talent intelligence that guides workforce planning, risk anticipation, and capability investment decisions. Most organisations collect hiring metrics; few translate them into actionable intelligence. ClurQ closes this gap.

AI within ClurQ aggregates patterns across sourcing quality, assessment outcomes, joining ratios, and early performance indicators. These signals surface role-level and function-level insights. Leaders gain visibility into skills under scarcity pressure, roles carrying higher delivery risk, and evaluation stages that create friction. Decision-making shifts from reactive hiring toward proactive capability planning.
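
At its simplest, spotting evaluation stages that create friction means comparing stage-to-stage conversion rates across roles. The joining ratio, for instance, is simply the offered-to-joined conversion. The sketch below assumes ordered funnel counts per role and a 30% threshold. The data structure, threshold, and function names are hypothetical, and the numbers are invented for illustration rather than taken from ClurQ data.

```python
# Hypothetical funnel analysis; structure, threshold and numbers are illustrative only.


def stage_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of candidates at each stage who reach the next one."""
    return [
        (stage, advanced / entered if entered else 0.0)
        for (stage, entered), (_, advanced) in zip(funnel, funnel[1:])
    ]


def friction_stages(funnels: dict[str, list[tuple[str, int]]],
                    threshold: float = 0.3) -> list[tuple[str, str, float]]:
    """Return (role, stage, rate) where fewer than `threshold` of candidates move on, worst first."""
    flagged = [
        (role, stage, round(rate, 2))
        for role, funnel in funnels.items()
        for stage, rate in stage_conversion(funnel)
        if rate < threshold
    ]
    return sorted(flagged, key=lambda item: item[2])


funnels = {
    "data_engineer": [("sourced", 400), ("screened", 140), ("assessed", 30),
                      ("offered", 12), ("joined", 9)],
    "qa_analyst": [("sourced", 200), ("screened", 90), ("assessed", 60),
                   ("offered", 20), ("joined", 18)],
}
print(friction_stages(funnels))
```

Each flagged stage is the one candidates fail to move on from. A low rate shows where to look but not why; interpreting it remains a human task.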

Recruiting conversations therefore move upstream. Talent discussions inform roadmap sequencing, budget allocation, and team composition choices. Hiring teams adjust expectations early rather than compensating late through rushed closures or compromised quality. This intelligence layer strengthens organisational resilience through cycles of growth and uncertainty.

Accessible insight remains critical. Dashboards translate complex data into decision-ready narratives, letting leaders engage without analytical overhead. AI supports pattern recognition, while humans retain authority over interpretation.

Platforms that make this intelligence visible support the transformation. As intelligence maturity increases, organisations begin measuring success differently, shifting focus from closure counts toward long-term talent impact, the subject of the next section.