Tech Hiring: What Actually Changes Results

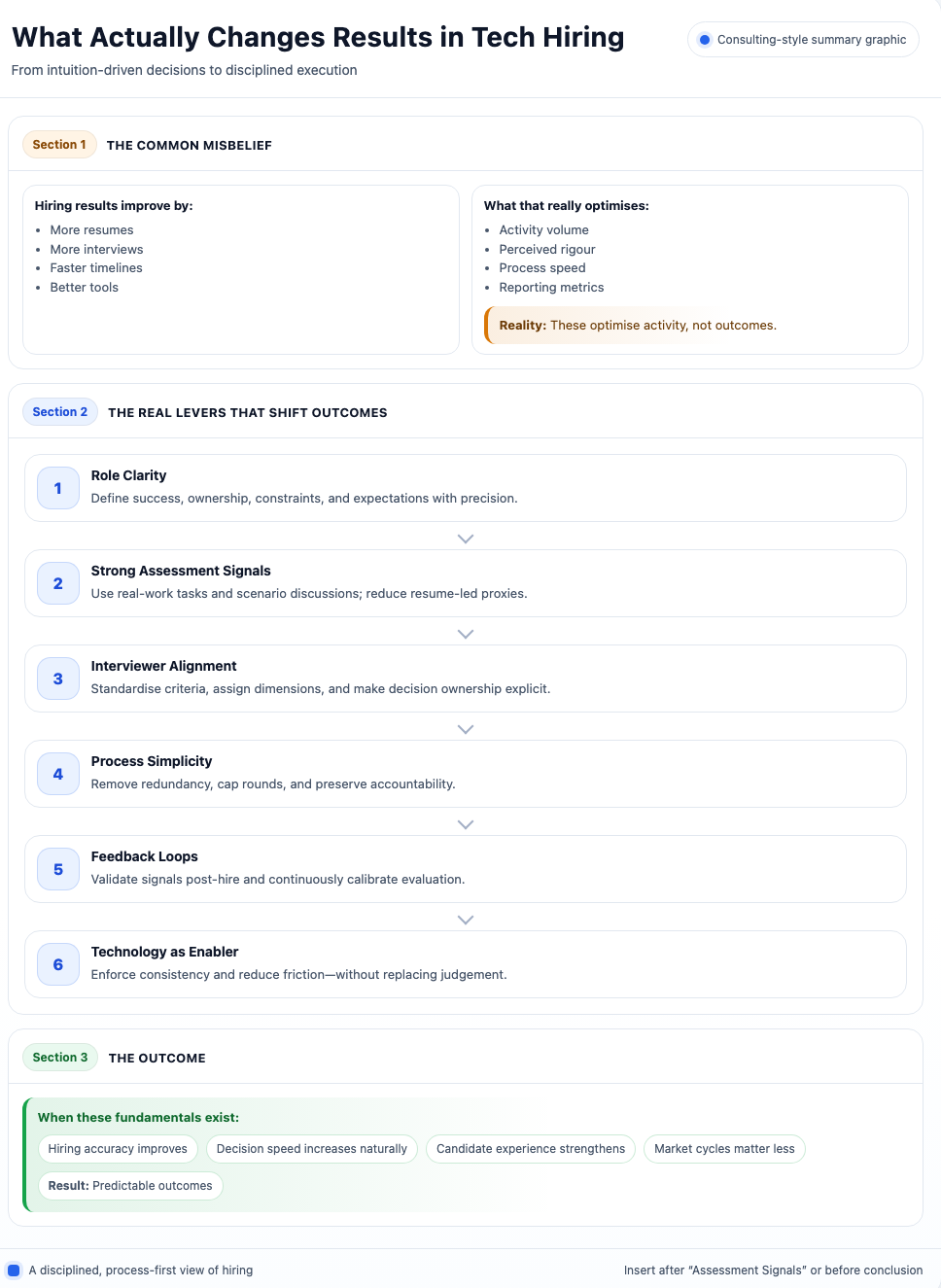

Tech hiring discussions often focus on surface-level levers: salary benchmarks, sourcing platforms, employer branding, and interview formats. While these elements influence outcomes, they rarely change results in a sustained way. Organisations that consistently hire strong technical talent operate through structured hiring workflows that prioritise clarity and evidence over volume or brand-driven comfort. Their advantage lies not in scale or spending, but in clarity.

High-performing hiring systems begin with precise role definition. Most hiring failures originate upstream, where expectations remain vague, contradictory, or inflated. When role outcomes, success metrics, and decision rights are unclear, even experienced interviewers fall back on proxy signals such as pedigree, prior compensation, or brand familiarity. These signals create comfort, not accuracy.

A second decisive factor is signal quality during evaluation. Resumes, keyword matching, and conversational interviews provide weak evidence about on-the-job performance. Teams that change results move assessment closer to real work. Short problem-solving tasks, scenario-based discussions, and context-rich evaluations surface capability faster while reducing bias.

Finally, speed matters, but not in isolation. Faster processes only work when alignment already exists across stakeholders. When hiring managers, recruiters, and interviewers share a common definition of “good,” decision velocity improves naturally. Without alignment, speed amplifies errors.

Tech hiring improves when organisations stop optimising steps and start correcting fundamentals. Results follow clarity, not complexity.

What Most Tech Hiring Processes Get Wrong

Tech hiring failures rarely stem from talent shortages. Instead, they arise from how organisations define, evaluate, and decide. Many teams assume that better tools or larger pipelines will compensate for weak judgement. Evidence suggests otherwise. When hiring outcomes disappoint, the root causes almost always trace back to process design rather than market conditions.

A common misstep is treating hiring as a transactional activity. Roles are opened reactively, timelines are compressed, and recruiters receive incomplete context. Under such conditions, speed becomes the priority and quality becomes an aspiration. Hiring managers rely heavily on intuition, pattern recognition, or familiarity, believing experience alone ensures accuracy. Unfortunately, intuition scales poorly across diverse technical roles.

Another recurring issue is the illusion of consensus. Interview panels often appear aligned, yet individual members apply different evaluation criteria. One interviewer prioritises system design depth, another values communication, while someone else focuses entirely on previous company logos. Without explicit calibration, final decisions represent compromise rather than conviction.

Process complexity further compounds problems. Multiple interview rounds, overlapping evaluations, and excessive stakeholder involvement slow momentum without improving signal strength. Candidates disengage, strong talent exits pipelines, and teams rationalise outcomes by citing “market realities.”

Organisations that change results confront uncomfortable truths. They simplify processes, remove redundant steps, and enforce discipline around evidence. They accept that fewer interviews with stronger signal outperform elaborate frameworks with weak insight. Most importantly, they acknowledge that hiring quality reflects organisational clarity, not candidate availability. When teams address these structural gaps, hiring outcomes improve predictably, regardless of market cycles.

Across organisations that improve outcomes, one pattern appears repeatedly: teams invest disproportionate effort in clarifying roles before sourcing candidates.

Why Role Clarity Drives Hiring Outcomes

Role clarity is the most underestimated lever in tech hiring. Organisations frequently assume clarity exists because job descriptions circulate internally or positions receive approval through formal workflows. In practice, clarity remains superficial. Teams agree on titles and skill lists but rarely align on outcomes.

Effective hiring begins by defining success conditions with precision. What will strong performance look like after six months? Which problems must this role solve independently? Where does accountability start and end? Without answers, interviewers unconsciously substitute personal benchmarks. Decisions then reflect individual bias rather than organisational need.

Ambiguity also distorts candidate assessment. When expectations remain fuzzy, evaluators overweight visible experience rather than relevant capability. Years worked, prior employers, or familiar technologies become shortcuts. These shortcuts feel efficient, yet they disconnect hiring decisions from real operating requirements.

Clear roles also improve candidate quality indirectly. Strong candidates gravitate toward opportunities where expectations feel explicit and realistic. Vague roles attract volume, not fit. Precision deters unsuitable applicants early, saving time for every team involved.

Leading organisations formalise role clarity before initiating outreach. They document problem statements, execution constraints, collaboration interfaces, and learning expectations. Interview questions then map directly to these dimensions, reducing variance across evaluators.

Role clarity does not slow hiring. It accelerates confidence. When teams agree on what success means, decisions require less debate and fewer corrective hires. Over time, this discipline compounds, producing consistent hiring outcomes independent of recruiter strength or interview panel composition.

Clarity, not volume, determines hiring reliability.

Assessment Signals That Predict Real Performance

If role clarity defines what success looks like, assessment quality determines whether teams can reliably identify it. Many hiring processes collapse at this stage. Despite clear intentions, organisations continue to rely on signals that correlate poorly with actual job performance.

Resumes illustrate the problem clearly. They summarise exposure, not execution. Keyword matches suggest familiarity, not depth. Conversational interviews test articulation more than applied judgement. These signals feel efficient because they are familiar, yet familiarity does not equal validity.

Teams that improve hiring outcomes deliberately redesign assessment around evidence. Instead of asking candidates what they have done, they observe how candidates think and work. Short, scoped tasks simulate real constraints without turning hiring into unpaid labour. Scenario discussions test decision-making under ambiguity. Architecture reviews expose trade-offs, not memorised patterns.

Importantly, stronger signals simplify decisions. When evidence reflects role reality, interviewers converge faster. Disagreement drops because judgement rests on observable behaviour rather than interpretation. This alignment reduces false negatives while guarding against false positives, such as confident candidates who later underperform.

Assessment redesign also improves candidate experience. Strong candidates prefer meaningful evaluation over repetitive questioning. They perceive fairness when expectations mirror actual work. Over time, this reputation compounds, attracting higher-quality talent organically.

Hiring accuracy improves when organisations stop collecting more signals and start collecting better ones. Quality evidence, aligned with clear roles, transforms hiring from opinion-driven selection into repeatable judgement.

Why Speed Only Works After Alignment

Once assessment signals improve, many organisations try to accelerate hiring. Speed becomes the headline metric, yet acceleration without alignment often worsens outcomes. Fast decisions amplify existing flaws rather than correct them. The difference between effective speed and reckless haste lies in shared understanding.

Alignment begins with a common definition of quality. When interviewers evaluate against identical success criteria, decisions converge naturally. Without alignment, faster cycles merely compress debate. Interview feedback becomes contradictory, and final decisions depend on seniority rather than evidence.

Well-aligned teams reduce friction by design. Interview roles remain distinct. Evaluation dimensions remain explicit. Each interviewer knows which signal they own and which decisions fall outside their scope. This discipline prevents overlap while preserving accountability.

Speed also depends on decision ownership. High-performing organisations assign clear hiring authority. Committees advise, but owners decide. Ambiguity around ownership delays closure, increases candidate drop-off, and diffuses responsibility when outcomes disappoint.

Importantly, aligned speed benefits candidates as well. Predictable timelines, fewer interviews, and decisive communication signal organisational maturity. Strong candidates respond positively to clarity and respect.

When alignment exists, speed emerges as an outcome rather than an objective. Teams move quickly because decisions feel obvious, not because deadlines demand urgency. Sustainable hiring velocity results from shared judgement, not compressed timelines.

Organisations that internalise this principle hire faster without sacrificing quality, even during competitive cycles.

The Hidden Cost of Over-Engineering Hiring

As organisations try to reduce hiring risk, many introduce additional layers: more interviews, more stakeholders, more frameworks. The intention is sound, yet outcomes often deteriorate. Over-engineered hiring processes increase effort without improving accuracy.

Each added step introduces noise. Interview fatigue lowers signal quality for both candidates and evaluators. Redundant conversations surface similar information, framed differently, creating false contradictions. Decision-makers then resolve confusion through hierarchy rather than evidence.

Complexity also weakens accountability. When many people participate, ownership dilutes. No single evaluator feels responsible for final outcomes. Poor hires become collective failures, reducing incentive for learning or correction.

High-performing organisations move deliberately toward simplicity. They cap interview rounds. They remove steps that fail to improve decision confidence. They treat hiring like any other system, measuring the effort invested relative to the quality of the output.

Importantly, simplicity does not mean leniency. Strong hiring systems remain selective, yet focused. Each interaction exists for a defined purpose. Every signal maps directly to role success. Anything else disappears.

Candidates notice this discipline immediately. Efficient processes signal respect, seriousness, and confidence. Strong talent interprets excessive complexity as internal indecision or risk aversion.

When organisations simplify hiring, accuracy improves while costs decline. Fewer interviews, clearer decisions, stronger outcomes. Engineering restraint, not expansion, separates effective hiring systems from bureaucratic ones.

Why Hiring Systems Fail Without Feedback Loops

Even well-designed hiring processes degrade without feedback. Many organisations treat hiring decisions as terminal events rather than hypotheses requiring validation. Once a candidate joins, attention shifts toward delivery, leaving hiring assumptions unexamined. Over time, errors repeat quietly.

Effective hiring systems incorporate feedback deliberately. They track post-hire performance against original role expectations. Which signals predicted success? Which indicators misled evaluators? Without this reflection, teams continue optimising intuition rather than evidence.

Feedback also corrects interviewer bias. Interviewers rarely see how their hires turn out, so their confidence goes unchecked. When evaluators understand where their judgement held up or failed, calibration improves naturally. Hiring quality then compounds through learning rather than turnover.

Another overlooked feedback mechanism involves candidate data. Drop-off points, offer declines, and interview abandonment reveal structural issues. Slow responses, unclear communication, or excessive steps surface clearly through candidate behaviour. Organisations that ignore this data misdiagnose problems as talent shortages.

Feedback needs a baseline to measure against. Leading organisations formalise expectations early through outcome-driven role definition, ensuring interview questions and evaluation criteria reflect real operating needs rather than generic skill lists.

Short reviews at defined intervals after each hire assess decision accuracy, process friction, and signal reliability. These insights feed directly into role design, assessment structure, and interviewer training.

Hiring excellence depends less on initial design than on ongoing correction. Systems improve when organisations treat hiring as measurable work, not discretionary judgement. Feedback transforms hiring from an episodic activity into an institutional capability.

Technology Helps Only After Process Discipline

Once feedback is in place, many organisations expect technology to elevate hiring quality automatically. This expectation often disappoints. Tools amplify existing behaviour; they do not correct weak fundamentals. Without process discipline, technology accelerates noise rather than insight.

Applicant tracking systems illustrate this clearly. When roles lack clarity and assessments lack signal, automation simply organises poor decisions efficiently. Dashboards fill with activity metrics while outcome quality remains unchanged. Volume increases, confidence declines.

High-performing organisations reverse this sequence. They stabilise process first, then introduce technology selectively. Tools support defined workflows, enforce consistency, and remove manual friction. They do not replace judgement; they protect it.

Technology proves most valuable when used sparingly. Structured scorecards reduce variance. Automated scheduling preserves candidate experience. Centralised feedback improves accountability. Each intervention addresses a specific failure point rather than promising universal improvement.

Importantly, restraint matters. Excessive tooling introduces dependency and reduces interviewer ownership. When systems dictate decisions, evaluators disengage intellectually. Strong hiring requires human judgement supported by structure, not overridden by software.

Candidates also respond better to disciplined systems. Clear communication, predictable timelines, and purposeful interactions signal maturity. Technology becomes invisible, so the experience stays personal despite scale.

Hiring outcomes improve when organisations treat technology as infrastructure, not strategy. Process discipline defines direction; tools merely enable execution. Without discipline, no software meaningfully changes results.

Why Market Conditions Matter Less Than Execution

Economic cycles frequently dominate hiring narratives. During slowdowns, organisations blame talent scarcity or candidate risk. During upswings, they attribute success to favourable markets. This framing obscures a more consistent truth: execution quality outweighs external conditions.

Organisations with strong hiring fundamentals perform across cycles. They adapt expectations without compromising clarity. They recalibrate compensation without distorting role definition. Most importantly, they maintain evaluation discipline when pressure rises.

Market volatility exposes weak systems. When demand increases, unclear roles attract unsuitable volume. When conditions tighten, risk aversion amplifies bias. Teams then default to safe profiles rather than suitable ones. These reactions appear rational, yet they reflect process fragility rather than market reality.

Execution discipline creates resilience. Clear roles prevent overcorrection. Strong assessment signals preserve confidence. Aligned decision-making enables speed without panic. These capabilities stabilise outcomes regardless of hiring environment.

Organisations that track hiring accuracy tend to see the same pattern: variation in hiring outcomes traces more to internal execution than to external talent supply. Teams with weak processes experience extreme swings, while disciplined systems remain steady.

This distinction matters strategically. Leaders who attribute hiring outcomes to market conditions invest their energy in the wrong places. They chase tools, vendors, or branding initiatives instead of fixing execution gaps.

Hiring success depends less on timing than on operational maturity. Markets change. Execution endures.

What Actually Changes Results in Tech Hiring

Across organisations, tech hiring success appears complex but follows a consistent pattern. Outcomes improve when teams stop chasing incremental optimisations and start correcting foundational weaknesses. The most reliable improvements originate upstream, long before resumes enter pipelines or interviews begin.

Role clarity anchors judgement. Assessment quality supplies evidence. Alignment enables speed. Simplicity preserves accountability. Feedback drives learning. Technology supports, but never substitutes for, disciplined execution. Each element reinforces the others, forming a coherent system rather than a set of isolated tactics.

Organisations that change results treat hiring as strategic work. They invest thought before action. They prioritise evidence over comfort. They resist unnecessary complexity and challenge intuition when data disagrees. Over time, hiring becomes predictable rather than reactive.

Importantly, these principles scale across company size and hiring volume. Startups gain focus. Large enterprises regain consistency. Market conditions lose influence as internal capability strengthens.

Tech hiring rarely fails due to talent shortages. It fails when organisations accept ambiguity, tolerate weak signals, and avoid accountability. Correct these fundamentals, and outcomes shift reliably.

The lesson is straightforward but demanding. Better hiring does not require more effort. It requires better thinking. Organisations willing to invest in clarity, discipline, and learning will consistently outperform those searching for shortcuts.

Results change not through innovation alone, but through execution done well, repeatedly.